Rewriting Minefield Mahjong in Rust

In order to learn Rust better, I decided to rewrite one of my previous projects, Minefield Mahjong. The game was originally written in Python, so I had an opportunity to see which ideas translate well and what needs to be different.

As a disclaimer, I'm pretty new to Rust. If something didn't work out for me, or I made a mess, it's very likely that there is a better way that I haven't found. Still, I thought it might be interesting to share what I noticed.

What is Minefield Mahjong?

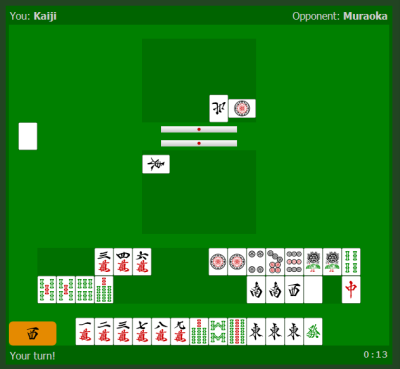

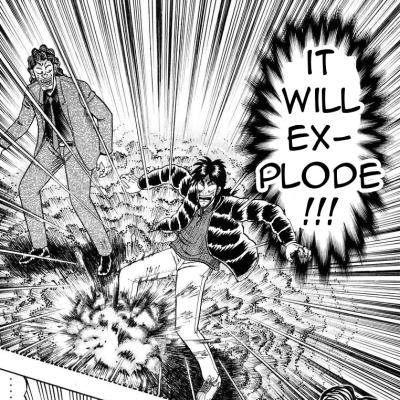

Not long after Kaiji wins his freedom from the sinister Teiai Corporation, he is pulled into another crazy gamble. Invited by two of his friends, Kaiji is going to take on Takashi Muraoka, a shady casino owner, in a game of Minefield: a high-stakes two-player variant of Riichi Mahjong.

Kaiji-kun… that won't be a problem! You see, my mahjong games take no time at all!

Minefield Mahjong is a quick and tense game. After the two players prepare their initial tiles, they have to take "17 steps over the mine field", trying to play a tile that will not cause the other player to win. One misstep and you lose a huge amount of money.

Nobuyuki Fukumoto, author of Kaiji and Akagi, can make even a game of Rock Paper Scissors look incredibly captivating, but mahjong is where he really spreads his wings. The match goes on for 13 manga volumes, and there really are enough clever plays and plot twists, dramatic moments and detailed analyses, that you don't feel like it overstays its welcome.

The project

The Minefield Mahjong project started back in 2013 as a hackathon I organized with a few friends. While the game is pretty niche, the idea sounded interesting: an HTML5 game, multiplayer, over the network. I remember we used socket.io because that was the popular technology for network communication (websockets weren't fully supported everywhere yet), and Python on the server because most of us didn't know JavaScript well enough to be comfortable with it on the server.

During one day, we managed to build a "mahjong rules" engine and start the UI. We never repeated the hackathon, and the development slowed down a bit, but a friend finished the rules engine and was able to play using a terminal.

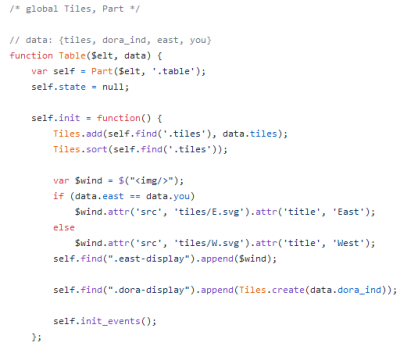

I picked up the project a few months later and was able to get it to a workable state. Drawing on my work experience with the Codility candidate interface, I wrote a hacky "jQuery soup" style frontend, and the project was ready to be published.

After that, Minefield turned out to be a great playground for new ideas. One of them was persistence: the games were stored in memory, but I wanted to handle server restarts. I ended up storing games in an SQLite database and implementing a "replay" scheme for reconnecting clients.

Later, after I learned a bit of React and Redux, I rewrote the frontend. I was really happy with the improvement: not only was rendering much easier, but the whole state and network message processing code was completely separate and could be tested in isolation.

Some other improvements followed. I replaced socket.io with a "pure" websocket engine. A friend ported the code to Python 3. Still, the codebase is kind of a mess: there is a spaghetti of objects calling each other, and I never hunted down a leak that caused some abandoned games to stay on the list, or a bug that caused the bot to die occasionally. I just restarted the server from time to time.

Minefield always used gevent, a Python library that executes "normal" code asynchronously: this is good for websockets, because you don't have to spawn a separate thread for every connected user. Nowadays, the canonical way is to do that using asyncio (with special functions defined as async), so I started to port the code over, but soon ran into a wall. Because of the callback-driven style, the game logic is actually indirectly calling the server code, and as a result the async thing is infecting everything: if sending a message is an asynchronous operation, then every other function also has to be async.

It's not as big a deal as it sounds; it wouldn't be that hard to refactor the project. However, it gave me a good excuse to try out something completely different. I decided to try and rewrite the whole server in Rust. The project was big enough for me to learn something new, but also well-defined and easy to test: I would be connecting the same JavaScript frontend to the new server.

Welcome to Rust

What do you notice first when coming from Python to Rust?

Of course, first of all, the code is statically typed. Every function has to be annotated, every type has to fit. There is type inference for local variables, so you can still declare them with just let, but the type has to be unambiguous from the context.

I really like how everything is immutable by default. You cannot modify a variable unless it's declared as mut:

fn powers_of_two(n: usize) -> Vec<usize> {
    let mut result = vec![];
    let mut current = 1;
    // the loop counter itself is not needed, hence `_`
    for _ in 0..n {
        result.push(current);
        current *= 2;
    }
    result
}

That's much better than the other way around (having to use const). It's not like you shouldn't use mutable variables, but you probably won't use them if you don't need them. Same with public/private: the default is private, and you need to use pub if you want your code to be visible outside.

There are methods, sort of, in that you can declare a function on a type with the familiar foo.bar() syntax. There is no run-time polymorphism, though (at least not until you ask for it). If something has a type Foo, you can be sure it has these specific fields and so on.

However, there are both generic types (such as Vec<usize> above), and traits which can be implemented by many types. For instance, you can create your own type implementing Iterator<Item = usize>, and it will work with any code that expects T: Iterator<Item = usize>.
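As a minimal sketch of that idea (this is not code from Minefield; PowersOfTwo and sum_all are names made up for the example), here is a hand-written iterator next to a generic function that accepts any iterator of usize:

```rust
// Hypothetical example type: yields 1, 2, 4, ... for a fixed number of steps.
struct PowersOfTwo {
    current: usize,
    remaining: usize,
}

impl PowersOfTwo {
    fn new(count: usize) -> PowersOfTwo {
        PowersOfTwo { current: 1, remaining: count }
    }
}

impl Iterator for PowersOfTwo {
    type Item = usize;

    fn next(&mut self) -> Option<usize> {
        if self.remaining == 0 {
            return None;
        }
        let value = self.current;
        // wrapping_mul avoids an overflow panic if someone asks for too many powers
        self.current = self.current.wrapping_mul(2);
        self.remaining -= 1;
        Some(value)
    }
}

// Works with any type that implements Iterator<Item = usize>.
fn sum_all<T>(values: T) -> usize
where
    T: Iterator<Item = usize>,
{
    values.sum()
}

fn main() {
    println!("{}", sum_all(PowersOfTwo::new(4)));
    println!("{}", sum_all(vec![1usize, 2, 3].into_iter()));
}
```

The same sum_all accepts both the custom type and a standard vector iterator, and the compiler generates a separate specialized version for each.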

The most famous are Rust's value semantics and borrowing rules. You can pass values around, but they will be moved, not copied. And you can create a reference to them, but they follow a readers-writer lock pattern: at a given time, you can either have many immutable references, or a single mutable reference, but not both.

let mut foo = vec![1, 2, 3];
use_foo(foo);
// foo.push(4); // can't use foo anymore, it has been passed to use_foo()

let mut bar = vec![1, 2, 3];
for i in bar.iter() {
    ...
    // bar.push(4); // can't modify bar, it's borrowed by iter() above
}
bar.push(4); // can modify bar again

The rules about references get more complicated when you have objects referring to each other, but for simple cases, they are still easy to follow. The interesting part is that they push you towards a specific style of programming. For instance, you will be much more hesitant to write methods that mutate your data (take &mut self), because it's harder to combine them.

Implementing the rules

I started the rewrite by working on the mahjong rules. There is a lot of code there that analyses all possible arrangements of tiles. In Python, you can often get by using generators:

def find_pairs(tiles):
    for i in range(len(tiles)-1):
        if tiles[i] == tiles[i+1]:
            yield ((tiles[i], tiles[i+1]), tiles[:i]+tiles[i+2:])

find_pairs([1, 2, 2, 3, 4, 4])
# -> (2, 2), [1, 3, 4, 4]
# -> (4, 4), [1, 2, 2, 3]

Combining a few functions like that is a pretty easy way to implement Prolog-style backtracking: call find_pairs (and find_groups) repeatedly, and you can generate all possible decompositions of a set of tiles.

Unfortunately, generator functions do not really exist in Rust (although there are experiments). There are iterators, and combining them using .map(), .filter() and so on works well, but defining your own iterator from scratch is harder.
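To show what "from scratch" means here, this is a rough sketch of find_pairs as a hand-written Rust iterator. It is an illustration only, not the code I ended up with: tiles are plain u8 values, and the FindPairs struct is a name invented for the example. The loop position that Python keeps for you inside the generator has to become an explicit field:

```rust
// Hypothetical iterator state: the slice being scanned and the next index to try.
struct FindPairs<'a> {
    tiles: &'a [u8],
    pos: usize,
}

fn find_pairs<'a>(tiles: &'a [u8]) -> FindPairs<'a> {
    FindPairs { tiles: tiles, pos: 0 }
}

impl<'a> Iterator for FindPairs<'a> {
    // (the pair, the remaining tiles), like the Python generator
    type Item = ((u8, u8), Vec<u8>);

    fn next(&mut self) -> Option<Self::Item> {
        // Resume scanning where the previous call stopped.
        while self.pos + 1 < self.tiles.len() {
            let i = self.pos;
            self.pos += 1;
            if self.tiles[i] == self.tiles[i + 1] {
                let mut rest = self.tiles[..i].to_vec();
                rest.extend_from_slice(&self.tiles[i + 2..]);
                return Some(((self.tiles[i], self.tiles[i + 1]), rest));
            }
        }
        None
    }
}

fn main() {
    for (pair, rest) in find_pairs(&[1, 2, 2, 3, 4, 4]) {
        println!("{:?} {:?}", pair, rest);
    }
}
```

Four lines of Python turn into a struct, a constructor and an impl block, which is why returning a Vec often felt like the path of least resistance.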

Look at Python's itertools library - there are a lot of different combinators like zip(a, b), repeat(a), all defined as simple Python functions (even if the real implementation is something else, for efficiency). There are also recipes for more. There is a similar library for Rust, also called itertools, but most of the combinators there define their own type - Zip<A, B>, RepeatN<A> and so on. Doable, but much less convenient.

So that's all to say I really miss the generators from Python. In many cases, where I could just generate all possibilities at once, I wrote functions that return a Vec, because that seemed easiest. For the backtracking algorithm, I wrote a generic function that takes a BacktrackStrategy:

pub trait BacktrackStrategy {
    type Result;

    // Generate all the possibilities to try out in a given state
    fn generate(&self, state: &State) -> Vec<Possibility>;

    // Check if there are results
    fn check(&self, state: &State) -> Vec<Self::Result>;
}

// Run the strategy
fn backtrack<T>(state: &mut State, strategy: &T) -> Vec<T::Result>
where
    T: BacktrackStrategy,
{ ... }

The backtrack function will repeatedly call generate and check, collecting all the results.

I suspect it's not a very Rusty solution. It's something more like the Strategy pattern from OOP. I could also define generate and check as function arguments passed to backtrack directly, but it doesn't make the code any simpler.
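To make the shape of this more concrete, here is a self-contained sketch of how such a backtrack function could work. Everything specific in it is an assumption made for the example: the State and Possibility types, the way a possibility is applied and undone, and the toy SameTileGroups strategy (which only knows pairs and triples of identical tiles) are simplified stand-ins, not the real Minefield types.

```rust
// Hypothetical state: tiles still to be grouped (sorted), and groups chosen so far.
struct State {
    remaining: Vec<u8>,
    groups: Vec<Vec<u8>>,
}

// Hypothetical possibility: take the first `size` remaining tiles as one group.
struct Possibility {
    size: usize,
}

trait BacktrackStrategy {
    type Result;
    fn generate(&self, state: &State) -> Vec<Possibility>;
    fn check(&self, state: &State) -> Vec<Self::Result>;
}

fn backtrack<T>(state: &mut State, strategy: &T) -> Vec<T::Result>
where
    T: BacktrackStrategy,
{
    let mut results = strategy.check(state);
    for possibility in strategy.generate(state) {
        // Apply the choice...
        let group: Vec<u8> = state.remaining.drain(..possibility.size).collect();
        state.groups.push(group);
        // ...explore everything that follows from it...
        results.extend(backtrack(state, strategy));
        // ...and undo it, putting the tiles back at the front.
        let mut restored = state.groups.pop().expect("group was pushed above");
        restored.append(&mut state.remaining);
        state.remaining = restored;
    }
    results
}

// Toy strategy: split tiles into pairs and triples of identical tiles.
struct SameTileGroups;

impl BacktrackStrategy for SameTileGroups {
    type Result = Vec<Vec<u8>>;

    fn generate(&self, state: &State) -> Vec<Possibility> {
        let r = &state.remaining;
        let mut out = Vec::new();
        for size in [2, 3] {
            if r.len() >= size && r[..size].iter().all(|&t| t == r[0]) {
                out.push(Possibility { size: size });
            }
        }
        out
    }

    fn check(&self, state: &State) -> Vec<Self::Result> {
        // A result is found once every tile has been put in a group.
        if state.remaining.is_empty() {
            vec![state.groups.clone()]
        } else {
            vec![]
        }
    }
}

fn main() {
    let mut state = State { remaining: vec![1, 1, 1, 1, 1, 1], groups: vec![] };
    for decomposition in backtrack(&mut state, &SameTileGroups) {
        println!("{:?}", decomposition);
    }
}
```

Because each choice is undone after the recursive call, a single mutable State is enough and nothing has to be cloned until a complete result is found.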

Enums and error handling

Some of the original Minefield's more problematic code was in network message handling. It looked something like this:

class Game:
    def on_message(self, msg_json):
        msg = json.loads(msg_json)
        type = msg['type']
        args = msg['args']
        getattr(self, 'on_' + type)(*args)

    def on_new_game(self, nick):
        ...

    def on_discard(self, tile):
        ...

It does the job, but it's very… dynamic: there is no parameter validation, no easy way to check if you missed or misspelled something, and so on. And probably passing something else in the JSON (like an array where a string is expected, or vice versa) can cause all kinds of funny behaviour.

In Rust, I created an enum type for the message, with all the possible variants specified:

enum Msg {
    NewGame { nick: String },
    Discard { tile: Tile },
    ...
}

fn on_message(..., msg: Msg) {
    match msg {
        Msg::NewGame { nick } => ...,
        Msg::Discard { tile } => ...,
    }
}

If a JSON library gives me a Msg, all the fields will have the right type. Also, the match is required to be exhaustive: if I add a new message type, and I'm not handling it in the match, the compiler will tell me to go back and fix that.

Rust's type system is quite good at making invalid states unrepresentable. If something cannot happen, maybe you can change your types so that it cannot even be constructed. For example, look at the following definition:

struct Hand {
    // 4 triples and a pair, OR seven pairs
    groups: Vec<Group>,
}

enum Group {
    Triple(Tile),
    Pair(Tile),
}

You can add run-time assertions to check if "4 triples and a pair OR seven pairs" holds, but it doesn't guarantee that the code will not crash. But what if you could ensure that only these types of hands can be represented?

enum Hand {
    Normal { triples: [Triple; 4], pair: Pair },
    Pairs { pairs: [Pair; 7] },
}

struct Triple(Tile);

struct Pair(Tile);

Constructing a Hand like this is going to be more complicated, but now instead of the English-language definition of a valid hand, you have the same encoded in Rust and checked for you automatically.
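Here is a sketch of what that "more complicated" construction might look like. It is only an illustration under a few assumptions: Tile is a plain u8 placeholder, and from_groups is a hypothetical constructor that converts the loose list of groups into the strict Hand type, returning None for anything that is not a valid shape.

```rust
use std::convert::TryInto;

type Tile = u8; // placeholder for the real tile type

#[derive(Debug, Clone, Copy, PartialEq)]
struct Triple(Tile);

#[derive(Debug, Clone, Copy, PartialEq)]
struct Pair(Tile);

#[derive(Debug, Clone, Copy)]
enum Group {
    Triple(Tile),
    Pair(Tile),
}

#[derive(Debug, PartialEq)]
enum Hand {
    Normal { triples: [Triple; 4], pair: Pair },
    Pairs { pairs: [Pair; 7] },
}

impl Hand {
    // Hypothetical constructor: the only place where the shape is checked.
    fn from_groups(groups: &[Group]) -> Option<Hand> {
        let mut triples = Vec::new();
        let mut pairs = Vec::new();
        for group in groups {
            match *group {
                Group::Triple(t) => triples.push(Triple(t)),
                Group::Pair(t) => pairs.push(Pair(t)),
            }
        }
        match (triples.len(), pairs.len()) {
            (4, 1) => {
                // Vec -> fixed-size array; fails if the length does not match
                let triples: [Triple; 4] = triples.try_into().ok()?;
                Some(Hand::Normal { triples: triples, pair: pairs[0] })
            }
            (0, 7) => {
                let pairs: [Pair; 7] = pairs.try_into().ok()?;
                Some(Hand::Pairs { pairs: pairs })
            }
            _ => None,
        }
    }
}

fn main() {
    let groups = [
        Group::Triple(1),
        Group::Triple(2),
        Group::Triple(3),
        Group::Triple(4),
        Group::Pair(9),
    ];
    println!("{:?}", Hand::from_groups(&groups));
}
```

The run-time check still exists, but it lives in exactly one function. Any code that receives a Hand can rely on the shape without re-checking it.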

Being this explicit is not always easy. In some cases, I wasn't able to "encode only the possible states", and ended up, for instance, with an Option value that I manually unpack by calling value.unwrap():

struct GameRoom {
    game: Option<Game>,
}

// I can check if the game is there:
if let Some(game) = &room.game {
    ...
}

// Or, if I'm sure it is there:
let game = room.game.unwrap();

This is less elegant, as failure will instantly abort the program, but usually it's exactly what I want in this case: I have a programmer mistake, not a user error, and there's no easy way to recover.

Serialization

Okay, so I created the pretty enum above, but how do I read and write it to JSON? Over the websocket, I'm sending messages in the following format:

{ "type": "new_game", "args": ["Akagi"] }

{ "type": "join", "args": ["Kaiji", "key"] }

In the Rust world everyone uses a serialization framework called serde. It's very modular: you describe your types in terms of Serde's own "type system" (usually by deriving Serialize and Deserialize, so that the derive macros generate the implementation for you). Then, you use one of the many "backend" libraries for a specific format like YAML, JSON or MessagePack.

Still, the existing format of messages looks pretty far from my new enum definition. I tinkered a bit with Serde's options, and it turns out I can get pretty close by passing a few options:

#[derive(Serialize, Deserialize)]
#[serde(tag = "type", content = "args")]
#[serde(rename_all = "snake_case")]
pub enum Msg {
    NewGame(String),
    Discard(String, String),
    ...
}

That gives me… almost what I want, but the args is different. Sometimes.

{ "type": "new_game", "args": "Akagi" }

{ "type": "join", "args": ["Kaiji", "key"] }

Unfortunately customizing the serialization further wasn't that simple. If I wanted the format to stay exactly the same, I would have to either:

- Write my own serializer, with a match over all possible messages. There are about 20 of them, so that's not a good idea.

- Do a pre/post-processing phase on the "args" array to handle it correctly.

I tried the second approach for a while, and ended up with some awful code full of special cases for 1-element array, 0-element array, some random empty dict {} in one of the cases, and so on. It turns out that I really wasn't careful with sending the messages in a regular format, just wrote whatever made sense at the time, in the language I was using - so messages originating from Python had one format, the ones originating from JavaScript had another, and the code wasn't exactly very strict on what fields it required.

So I scrapped all of that preprocessing, and changed the format slightly in Python and JavaScript so that it followed Rust conventions. Now I'm able to serialize messages with no custom code.

By the way, this is where I noticed I didn't have the protocol written down anywhere. I had to fish out the possible messages and their fields from different parts of the code. So I'm really happy I now have all the messages in the protocol defined in one central place.

I haven't done a lot with Serde, but I'm pretty happy with it. Later, when I wanted to save the games to disk, it literally took me only half an hour to write saving and loading the games.

Game architecture

Back when I was writing the Python Minefield, I wanted to have a "game" class that would just handle the game rules, and keep it isolated from all the real-life stuff like socket communication. So my idea was to use a callback:

class Game:
    def __init__(self, callback):
        self.callback = callback

    def start(self):
        # Send start_game to both players
        self.callback(0, 'start_game')
        self.callback(1, 'start_game')
        ...

That worked reasonably well, and I was able to write tests for many different game scenarios before working on the rest of the code. But then, I realized my server would handle multiple games, and each of the games would have two players, so I needed one more class: a game room. Very roughly, it was something like this:

class Room:
    def __init__(self):
        self.game = Game(callback=self.on_game_message)
        self.sockets[0] = Socket(callback=self.on_player_message)
        self.sockets[1] = Socket(callback=self.on_player_message)

    def on_player_message(self, message):
        self.game.on_message(message)

    def on_game_message(self, i, message):
        self.sockets[i].send(message)

It's starting to look ugly. Here is a full path of a message and responses between objects:

- We receive a message (msg = socket.receive()) and pass it to room
  - room.on_player_message(msg) executes, and passes the message to game
    - game.on_message(msg) executes, processes the message, and prepares 2 messages in response
      - The first response is passed to a callback registered in game, which is in room again…
        - room.on_game_message(response_1) executes, and passes the message to its own callback, which is in socket
          - socket.send(response_1) sends the response back to the player
      - The second response is also passed to the same callback…
        - room.on_game_message(response_2) executes
          - socket.send(response_2) sends the response back to the other player

And that's not even taking into account that players can join and leave rooms, create new ones, reconnect, and so on.

Fortunately, this is something that Rust will not allow us to do easily. See the room calling game which calls room again? That looks like we are trying to use ("borrow") room again, while it's still in use! Maybe after a lot of fighting I would find a way to make it work, but at this point I'd rather look for a simpler solution.

What's simpler is to return the response: instead of registering callbacks, give back the messages to the layer above to handle. Here is what it would look like:

- We receive a message (msg = socket.receive()) and pass it to room
  - room.on_player_message(msg) executes, and passes the message to game
    - game.on_message(msg) executes, processes the message, and prepares 2 responses
    - … the responses [response_1, response_2] are returned to room
  - … the responses [response_1, response_2] are returned to socket
- We send all the responses back:
  - socket.send(response_1)
  - socket.send(response_2)

In Python, I would probably write generators and yield the messages back, but in Rust I'm content to just return an array (Vec) of messages.

In the code, it looks a little clumsy, but it does the job. I am very happy that I managed to write a lot of "server" code that is still pure and does not involve any network communication yet.
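Roughly, the pattern looks like the sketch below. The names and message types here are simplified stand-ins invented for the example (the real Msg has many more variants, and the real Room also deals with joining and reconnecting). The point is only that every layer returns a Vec of (recipient, response) pairs instead of calling back up:

```rust
// Hypothetical, heavily simplified message types.
#[derive(Debug, Clone, PartialEq)]
enum Msg {
    Discard { tile: String },
}

#[derive(Debug, Clone, PartialEq)]
enum Response {
    Discarded { player: usize, tile: String },
}

struct Game {
    discards: Vec<(usize, String)>,
}

impl Game {
    // Pure game logic: update state, return what should be sent and to whom.
    fn on_message(&mut self, player: usize, msg: Msg) -> Vec<(usize, Response)> {
        match msg {
            Msg::Discard { tile } => {
                self.discards.push((player, tile.clone()));
                // Tell both players about the discard.
                (0..2)
                    .map(|recipient| {
                        (recipient, Response::Discarded { player: player, tile: tile.clone() })
                    })
                    .collect()
            }
        }
    }
}

struct Room {
    game: Game,
}

impl Room {
    // The room only forwards; it never has to be called back by the game.
    fn on_player_message(&mut self, player: usize, msg: Msg) -> Vec<(usize, Response)> {
        self.game.on_message(player, msg)
    }
}

fn main() {
    let mut room = Room { game: Game { discards: Vec::new() } };
    let responses = room.on_player_message(0, Msg::Discard { tile: "1m".to_string() });
    // Only this outermost layer would touch real sockets.
    for (recipient, response) in responses {
        println!("send to player {}: {:?}", recipient, response);
    }
}
```

Since nothing here touches a socket, the whole chain can be tested by calling on_player_message and comparing the returned Vec.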

Websockets

Network communication was the next part. It turned out that it's not that hard to do websockets in Rust, but it is a bit harder if you want to have an HTTP server that serves static files and also allows upgrading a connection to websockets. Especially if you want the code to be asynchronous - async is still quite young in Rust.

I ended up using two libraries (hyper for HTTP and tungstenite for WebSockets), but gluing them together myself. Now I know what it means to support websockets! You have to:

- Verify the right headers in the HTTP request (Connection: upgrade, Upgrade: websocket, …),

- Reply with a special response (101 Switching Protocols and the right headers),

- Spawn another thread / async task that will use the existing connection as a websocket stream.
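The first of these steps can be sketched in plain Rust. This is not hyper's or tungstenite's API, and not my actual glue code: headers are modelled as simple (name, value) string pairs, and is_websocket_upgrade is a hypothetical helper. The checks follow RFC 6455: header names and the token values are case-insensitive, Connection may carry several comma-separated tokens, and the client must send a Sec-WebSocket-Key.

```rust
// Look up a header by name, ignoring ASCII case.
fn header<'a>(headers: &[(&str, &'a str)], name: &str) -> Option<&'a str> {
    headers
        .iter()
        .find(|(key, _)| key.eq_ignore_ascii_case(name))
        .map(|(_, value)| *value)
}

// Hypothetical helper: does this request ask to be upgraded to a websocket?
fn is_websocket_upgrade(headers: &[(&str, &str)]) -> bool {
    // "Connection" may list several tokens, e.g. "keep-alive, Upgrade".
    let connection_ok = header(headers, "Connection").map_or(false, |value| {
        value
            .split(',')
            .any(|token| token.trim().eq_ignore_ascii_case("upgrade"))
    });
    let upgrade_ok = header(headers, "Upgrade")
        .map_or(false, |value| value.trim().eq_ignore_ascii_case("websocket"));
    // The key is later hashed into the Sec-WebSocket-Accept response header.
    let has_key = header(headers, "Sec-WebSocket-Key").is_some();
    connection_ok && upgrade_ok && has_key
}

fn main() {
    let request = [
        ("Host", "example.com"),
        ("Connection", "Upgrade"),
        ("Upgrade", "websocket"),
        ("Sec-WebSocket-Key", "dGhlIHNhbXBsZSBub25jZQ=="),
    ];
    println!("upgrade requested: {}", is_websocket_upgrade(&request));
}
```

Computing the Sec-WebSocket-Accept value for the 101 response needs SHA-1 and base64, which the standard library does not provide, so that part is left out of the sketch.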

The websocket connection will need some state. Rust will not allow me to just create a struct Server and pass it to another task - that could be unsafe! There are special markers (Send and Sync) that say what kind of data I'm allowed to send or share between tasks. As a result, I had to wrap my data in:

- a thread-safe reference-counting pointer (Arc), so that it's not dropped too soon, and

- a Mutex that will ensure only one task will hold the data at a time.

As in C++, mutexes follow Resource Acquisition Is Initialization, which means I don't have to worry about unlocking them manually. Locking returns a guard, and the mutex is unlocked when that guard goes out of scope:

// a handle to my Game
let mutex: Mutex<Game> = ...;

{
    let game_handle = mutex.lock().unwrap();
    // now I can use game_handle
    game_handle.start_game();
    ...
}
// game_handle goes out of scope, and the mutex is unlocked
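Putting Arc and Mutex together, a small self-contained sketch could look like this. It uses plain OS threads from the standard library instead of async tasks so that it runs without extra crates, and the Game struct and play_concurrently function are made up for the example:

```rust
use std::sync::{Arc, Mutex};
use std::thread;

// Hypothetical stand-in for the shared server state.
struct Game {
    moves: Vec<String>,
}

// Spawn one thread per player; each one records a move in the shared game.
fn play_concurrently(players: usize) -> usize {
    let game = Arc::new(Mutex::new(Game { moves: Vec::new() }));

    let handles: Vec<_> = (0..players)
        .map(|i| {
            // Each thread gets its own Arc pointing at the same Mutex<Game>.
            let game = Arc::clone(&game);
            thread::spawn(move || {
                let mut guard = game.lock().unwrap();
                guard.moves.push(format!("player {} discards", i));
                // guard is dropped here, which unlocks the mutex
            })
        })
        .collect();

    for handle in handles {
        handle.join().unwrap();
    }

    let count = game.lock().unwrap().moves.len();
    count
}

fn main() {
    println!("moves recorded: {}", play_concurrently(4));
}
```

Without the Arc the compiler rejects the thread::spawn call, because the spawned closure could outlive the local variable. Without the Mutex there is no way to get a mutable reference to the shared Game at all.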

So how does it work together? I have the following pieces of code (tasks) running:

- The HTTP server that is receiving requests,

- For each websocket connection, a "reader task" that is reading the messages and passing them to Game,

- For each websocket connection, a "writer task" that is pumping the responses back to the user,

- A special "beat task" that fires every second and handles things like time limits.

What's great is that this part does not need to know anything about game rules, joining rooms and so on. The network code and the logic code are completely separate.

Launching

The ultimate test of the game was connecting it to the existing JavaScript client. Fortunately, there were also plenty of opportunities to test before that. For many scenarios, I didn't even have to think too hard about the tests, just translate them from Python. Thanks, 2013 Minefield authors!

I was also able to write a bot and connect it to the Python server. That was an important check for the protocol. The Rust implementation, once it compiled, more or less worked, but network communication was crashing all the time because of JSON format mismatches that I had to fix.

What's nice is that I could already see the difference in performance. The old Python bot sometimes took 30-60 seconds to prepare its initial hand. The Rust bot, following the same algorithm, was done in one second.

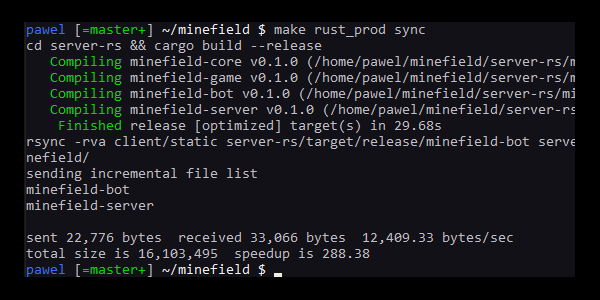

When everything was done, I published the Minefield bot and the game server on my website (https://pwmarcz.pl/minefield/). That was also a welcome change from Python: before, I had to push all the source files using git, then run pip install to upgrade the Python packages. The new deployment is just copying two binary files.

Trait methods

I am a bit unhappy about the amount of "implicitness" in Rust. When you call foo.bar(), the bar method does not have to come from the type of foo itself: it can also come from any trait that is implemented for that type.

That makes a lot of sense because it's how you can have arithmetic, comparison, ordering, I/O and so on, on many different types, including the new ones that you define. However, it also means that it's easy to add new methods, and there are many libraries that add convenient traits like StreamExt for stream handling, or SliceRandom for permutations:

use rand::seq::SliceRandom;

let mut rng = rand::thread_rng();
let mut numbers: Vec<usize> = vec![1, 2, 3, 4, 5];

// the shuffle() method comes from SliceRandom
numbers.shuffle(&mut rng);

To be fair, you do have to import the trait before the method is available. Still, just looking at the code, there is no way of telling where the method came from. That's a pretty big departure from Python's "explicit is better than implicit" in a language that otherwise tries to follow this rule.
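To see how little it takes to bolt a method onto an existing type, here is a small sketch of such an "extension trait". TileSliceExt and count_adjacent_pairs are invented for this example and exist in no library:

```rust
// Hypothetical extension trait: adds a method to every slice of u8.
trait TileSliceExt {
    fn count_adjacent_pairs(&self) -> usize;
}

impl TileSliceExt for [u8] {
    fn count_adjacent_pairs(&self) -> usize {
        // Count positions where two neighbouring tiles are equal.
        self.windows(2).filter(|w| w[0] == w[1]).count()
    }
}

fn main() {
    let tiles: Vec<u8> = vec![1, 2, 2, 3, 4, 4];
    // Looks like a built-in method of Vec, but comes from TileSliceExt.
    println!("{}", tiles.count_adjacent_pairs());
}
```

In a larger codebase the trait would live in another module, and the call site would only reveal its origin through a use line somewhere at the top of the file.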

Libraries

Another difference from Python is the approach to the standard library. Python is famously "batteries included" and a lot of things can be implemented without installing anything extra. In contrast, Rust's library is deliberately small, and you need a separate library for things like asynchronous programming (futures), random number generation (rand, then rand_distr for things like normal distribution), logging (log)…

And of course all of these libraries pull their own dependencies. As a result, Minefield declares use of about 15 libraries (crates), and the build process pulls about 200 of them. While I suspect the libraries' quality is better than over at NPM, and the ecosystem seems pretty healthy overall, it makes me a bit uncomfortable.

Summary

Rewriting an existing project was a weird experience. I had the Python implementation to lean on, so I'm sure some of my solutions were a bit lazy and more Pythonic than Rusty. Still, I feel that the project was big enough to allow me to learn a fair chunk of Rust and get a feeling for the language.

Many things ended up more verbose than Python, and that didn't surprise me. However, what was a bit surprising is how much Rust is a C++, not a C. I had an image in my mind of something simple and low-level like C, with all the "unsafe" bits solved somehow. Instead, I see there is a lot of effort to introduce proper abstractions and allow the user to program on a higher level.

If you want to play the game, here is the link again: Minefield Mahjong. And you can find the source code on GitHub. Thanks for reading!